Introducing the Ambisonic Toolkit

The paradigm

The Ambisonic Toolkit (ATK) brings together a number of classic and novel tools and transforms for the artist working with Ambisonic surround sound and makes these available to the SuperCollider3 user. The toolset is intended to be both ergonomic and comprehensive, and is framed so that the user is encouraged to ‘think Ambisonically’. That is, the ATK addresses the holistic problem of creatively controlling a complete soundfield, allowing and encouraging the artist to think beyond the placement of sounds in a sound-space (the sound-scene paradigm). Instead, the artist is encouraged to attend to the impression and imaging of a soundfield, taking advantage of the native soundfield-kernel paradigm the Ambisonic technique presents.

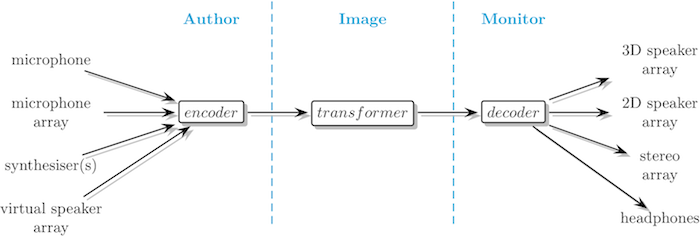

The ATK's production model is illustrated below:

ATK paradigm

Here you'll see that the ATK breaks down the task of working with Ambisonics into three separate elements:

- Author: Capture or synthesise an Ambisonic soundfield.

- Image: Spatially filter an Ambisonic soundfield.

- Monitor: Play back or render an Ambisonic soundfield.

The sections below go into more detail on each task. For more concise examples of usage in SynthDef and NRT, see SynthDef and NRT examples for ATK.
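
The three elements can be sketched as one short signal chain. This is a minimal sketch rather than a recommended setup: the pink noise source, the front-left direction and the MouseX mapping are arbitrary choices made for illustration, and a booted server with at least two outputs is assumed.

```supercollider
(
{
    var src, foa;

    // source: quiet pink noise (an arbitrary test signal)
    src = PinkNoise.ar(0.1);

    // Author: encode the mono source as a planewave arriving from front-left
    foa = FoaEncode.ar(src, FoaEncoderMatrix.newDirection(pi/4, 0));

    // Image: push the soundfield towards the front; MouseX sets the angle
    foa = FoaTransform.ar(foa, 'pushX', MouseX.kr(0, pi/2));

    // Monitor: decode to two-channel stereo (cardioids at 131 deg)
    FoaDecode.ar(foa, FoaDecoderMatrix.newStereo((131/2).degrad, 0.5))
}.play;
)
```

Each stage can be swapped independently: a different encoder, transform or decoder changes only its own line.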

Authoring

Users approaching Ambisonics are usually presented with two avenues to author an Ambisonic soundfield: capture a natural soundfield directly with a Soundfield microphone, or author a planewave from a monophonic signal. SuperCollider's inbuilt PanB provides the latter solution.

The ATK provides a much wider palette of authoring tools via FoaEncode. These include:

- planewave: classic directional encoding

- omnidirectional: a soundfield from everywhere

- virtual loudspeaker array: transcoding standard formats

- pseudoinverse microphone array: encoding from discrete microphones or signals

The pseudoinverse encoding technique provides the greatest flexibility, and can be used with both microphone arrays and synthetic signals. In the absence of a Soundfield microphone, this feature gives the opportunity to deploy real-world microphone arrays (omni, cardioid, etc.) to capture natural soundfields. With synthetic signals, pseudoinverse encoding is usually regarded as the method of choice to generate spatially complex synthetic Ambisonic images. In combination with the ATK's imaging tools these can then be compositionally controlled as required.

See FoaEncode, FoaEncoderMatrix and FoaEncoderKernel for more details about encoding.
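
As a hedged illustration of pseudoinverse encoding with synthetic signals, the sketch below feeds four uncorrelated noise sources to a virtual tetrahedral array of cardioids. It assumes that FoaEncoderMatrix.newDirections takes an array of [azimuth, elevation] directions followed by a microphone pattern (0.5 for cardioid); check the FoaEncoderMatrix help file for the exact signature. The directions and levels are arbitrary.

```supercollider
(
{
    var srcs, encoder;

    // four uncorrelated noise sources standing in for a microphone array
    srcs = { PinkNoise.ar(0.1) } ! 4;

    // assumed signature: newDirections(directions, pattern)
    // four cardioids (pattern 0.5) in a tetrahedral arrangement
    encoder = FoaEncoderMatrix.newDirections(
        [[45, 35.3], [-45, -35.3], [135, -35.3], [-135, 35.3]].degrad,
        0.5
    );

    // encode to B-format, then decode to stereo for auditioning
    FoaDecode.ar(
        FoaEncode.ar(srcs, encoder),
        FoaDecoderMatrix.newStereo((131/2).degrad, 0.5)
    )
}.play;
)
```

The result should be heard as a diffuse, spatially complex image rather than as four point sources.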

Imaging

For the artist, the real power of the ATK is found in the imaging transforms. These are spatial domain filters which reorient, reshape or otherwise spatially filter an input soundfield. Many users will be familiar with the soundfield rotation transform, as SuperCollider provides the inbuilt Rotate2.

The ATK provides a much wider and more comprehensive toolset, including:

- rotation: soundfield rotation about an axis

- mirror: soundfield reflection across an axis

- directivity: soundfield directivity

- dominance: adjust directional gain of soundfield

- focus: focus on a region of a soundfield

- push: push a soundfield in a direction

The imaging tools are provided in two forms: static and dynamic implementations. While most transforms are provided in both categories, a number are found in only one guise.

See FoaTransform, FoaXform and FoaXformerMatrix for more details about imaging.
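
The difference between the static and dynamic forms can be sketched with rotation. Here ~useDynamic is a hypothetical flag introduced only for this illustration; the source signal and angles are likewise arbitrary. The static form builds a fixed matrix on the language side, while the dynamic form accepts a control-rate angle.

```supercollider
// hypothetical switch for this sketch: true = dynamic, false = static
~useDynamic = true;

(
{
    var foa;

    // a planewave encoded at the front
    foa = FoaEncode.ar(PinkNoise.ar(0.1), FoaEncoderMatrix.newDirection(0, 0));

    foa = if(~useDynamic, {
        // dynamic: the rotation angle follows the mouse
        FoaTransform.ar(foa, 'rotate', MouseX.kr(pi, pi.neg))
    }, {
        // static: a fixed rotation of 90 degrees, computed once
        FoaXform.ar(foa, FoaXformerMatrix.newRotate(pi/2))
    });

    FoaDecode.ar(foa, FoaDecoderMatrix.newStereo((131/2).degrad, 0.5))
}.play;
)
```

The flag is read when the function is built, so change it and re-evaluate the block to hear the other form.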

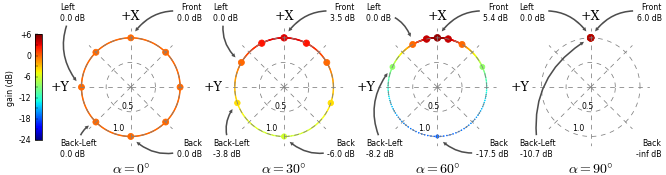

Reading imaging illustrations

Artists working with sound can be expected to be very capable expert listeners, able to audition the results of spatial filtering of an input soundfield. However, it is often very convenient to view a visual representation of the effect of a soundfield transform. The ATK illustrates a number of its included transforms in the form shown below.

The X-Y plane is shown as viewed from above, with the top of the plot corresponding to the front of the soundfield, +X. On the left hand side of the figures, an unchanged soundfield composed of eight planewaves is shown. These are indicated as coloured circles, and arrive from cardinal directions:

- Front

- Front-Left

- Left

- Back-Left

- Back

- Back-Right

- Right

- Front-Right

Three useful features are displayed in these plots, providing important insight as to how an input soundfield will be modified by a transform:

- incidence: illustrated as polar angle

- quality: illustrated as radius

- gain: illustrated via colour

Individual planewaves are coloured with respect to the gain scale at the far left of the figures. Additionally, Front, Left, Back-Left and Back are annotated with a numerical figure for gain, in dB.

Soundfield quality

The meaning of transformation to soundfield incidence and gain should be clear. Understanding the meaning of soundfield quality takes a little more effort. This feature describes how apparently localised an element in some direction will appear.

A planewave has a quality measure of 1.0, and is heard as localised. At the opposite end of the scale, with a quality measure of 0.0, is an omnidirectional soundfield. This is heard to be without direction or "in the head". As you'd expect, intermediate measures are heard as scaled between these two extremes.

Reading ZoomX

ZoomX imaging

As ZoomX's angle changes, we see the eight cardinal planewaves both move towards the front of the image and change gain. When angle is 90 degrees, the gain of the planewave at Front has been increased by 6 dB, while Back has disappeared. We also see that the soundfield appears to have collapsed to a single planewave, incident at 0 degrees.

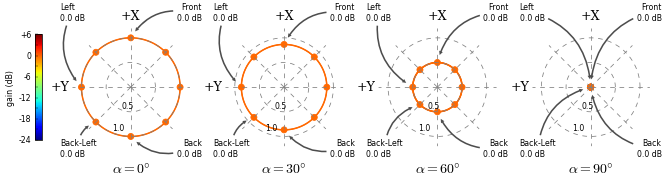

Reading PushX

PushX imaging

PushX also distorts the incident angles of the cardinal planewaves. However, here we see two differences from ZoomX. The gains of the individual elements don't vary as considerably. More intriguingly, a number of the encoded planewaves are illustrated as moving off the perimeter of the plot, indicating a change in soundfield quality.

Moving from the edge of the plot towards the centre does not imply the sound moves from the edge of the loudspeakers towards the centre, as one might expect. Instead, what we are seeing is the loss of directivity. Moving towards the centre means a planewave moves toward becoming omnidirectional, or without direction. (This becomes clearer when we look at DirectO.) A reducing radius indicates a reducing soundfield quality.

When PushX's angle is 90 degrees, all encoded planewaves have been pushed to the front of the image. Unlike ZoomX, gain is retained at 0 dB for all elements.

Reading DirectO

DirectO imaging

DirectO adjusts the directivity of the soundfield across the origin. Here we see the cardinal planewaves collapsing towards the centre of the plot. At this point the soundfield is omnidirectional, or directionless. See further discussion of soundfield quality above.

See FoaZoomX, FoaPushX and FoaDirectO for more details regarding use of these associated UGens.
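
To compare the three transforms by ear on a synthetic soundfield, one possible sketch is given below. The variable ~kind is a hypothetical selector introduced here; the circling noise source is an arbitrary test signal. MouseY sets the transform angle, from 0 at the bottom of the display to 90 degrees at the top.

```supercollider
// hypothetical selector for this sketch: 'zoomX', 'pushX' or 'directO'
~kind = 'zoomX';

(
{
    var foa, angle;

    // transform angle: top = 90 deg, bottom = 0 deg
    angle = MouseY.kr(pi/2, 0);

    // a single planewave slowly circling the listener
    foa = FoaPanB.ar(PinkNoise.ar(0.1), LFSaw.kr(0.1, mul: pi));

    // apply the chosen transform UGen
    foa = switch(~kind,
        'zoomX', { FoaZoomX.ar(foa, angle) },
        'pushX', { FoaPushX.ar(foa, angle) },
        'directO', { FoaDirectO.ar(foa, angle) }
    );

    FoaDecode.ar(foa, FoaDecoderMatrix.newStereo((131/2).degrad, 0.5))
}.play;
)
```

With the mouse at the top of the display, listen for the behaviour described above: ZoomX and PushX collapse the image to the front, while DirectO removes the sense of direction.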

Illustrated transforms

Additionally, the following UGens include figures illustrating imaging transformation:

Explore these to get a sense of the wide variety of image transformation tools available in the ATK.

Monitoring

Perhaps one of the most celebrated aspects of the Ambisonic sound technique has been its design as a hierarchical reproduction system, able to target a number of varying loudspeaker arrays. Users may be familiar with SuperCollider's inbuilt regular polygon decoder, DecodeB2.

The ATK provides a much wider palette of optimised monitoring tools via FoaDecode. These include:

- stereo UHJ: classic Ambisonic stereo decoding

- binaural: measured and synthetic HRTFs

- regular 2D & 3D: single and dual polygons

- diametric 2D & 3D: flexible semi-regular arrays

- 5.0: Wiggins optimised decoders

While the regular decoders will be suitable for many users, diametric decoding enables the greatest flexibility, and allows the user to design substantially varying semi-regular arrays suitable for a wide variety of playback situations.

See FoaDecode, FoaDecoderMatrix and FoaDecoderKernel for more details about decoding.
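
Before committing to a decoder, it can help to check how many outputs each one needs. The sketch below builds a few matrix decoders (using constructor calls that also appear in the examples further down) and posts their kind and output count. Kernel decoders are left out here on the assumption that they need a booted server to load their kernels.

```supercollider
(
// matrix decoders are built on the language side
[
    FoaDecoderMatrix.newStereo((131/2).degrad, 0.5),
    FoaDecoderMatrix.newQuad(k: 'dual'),
    FoaDecoderMatrix.newPanto(6, k: 'dual'),
    FoaDecoderMatrix.newPeri(k: 'dual')
].do({ arg decoder;
    // post the decoder kind and the number of loudspeaker feeds it produces
    "% decoder: % outputs\n".postf(decoder.kind, decoder.numOutputs);
});
)
```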

Installation

The ATK library for SuperCollider3 is distributed via the sc3-plugins project. If you're reading this document, these extensions have most likely been correctly installed.

Additionally, the ATK has a number of dependencies. Please install the following:

- MathLib Quark.

- FileLog Quark.

- ATK Kernels

- ATK Example Soundfiles

For Quark installation, see: Using Quarks and the Quarks for SuperCollider project.
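
As a rough sketch, the two Quarks can usually be installed from within SuperCollider, assuming the Quarks system is available and the machine is online. The class library needs to be recompiled afterwards. The final line checks for one of the example soundfiles at the path used by the examples below.

```supercollider
// install the Quark dependencies, then recompile the class library
Quarks.install("MathLib");
Quarks.install("FileLog");

// after recompiling: confirm the example soundfiles are where the examples expect them
File.exists(Atk.userSoundsDir ++ "/b-format/Anderson-Pacific_Slope.wav").postln;
```

The kernels and example soundfiles are separate downloads and are not installed by the Quarks system.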

Examples

The examples below are intended to briefly illustrate playback and imaging of soundfields with the ATK. The user is encouraged to explore the ATK's documentation to gain a deeper understanding of the flexibility of these tools.

As the Ambisonic technique is a hierarchical system, numerous options for playback are possible. These include two channel stereo, two channel binaural, pantophonic and full 3D periphonic. With the examples below, we'll take advantage of this by first choosing a suitable decoder with which to audition.

Choose a decoder

A number of decoders are defined below. Please choose one suitable for your system. Also, don't forget to define ~renderDecode!
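
If the chosen decoder needs more outputs than the server provides, the number of output bus channels has to be set before the server boots. A minimal sketch follows; the value 8 is an assumption chosen to cover the eight-channel cube and bi-rectangle decoders listed below.

```supercollider
// set before booting (or call s.reboot if the server is already running)
s = Server.default;
s.options.numOutputBusChannels = 8;
```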

// ------------------------------------------------------------
// boot server
(
s = Server.default;
s.boot;
)

// ------------------------------------------------------------
// define convenience function to verify number of server outputs
(
~checkMyServerOutputs = { arg server, decoder;
    server.serverRunning.if({
        (decoder.numOutputs > server.options.numOutputBusChannels).if({
            "Number of Server output bus channels is less than number required by Decoder!".warn;
            "Server Outputs: %\n".postf(server.options.numOutputBusChannels);
            "Decoder Outputs: %\n\n".postf(decoder.numOutputs);
            "Update number of Server outputs as illustrated here: ".post;
            "http://doc.sccode.org/Classes/ServerOptions.html#examples".postln;
        }, {
            "Server has an adequate number of output bus channels for use with this Decoder!".postln;
        })
    })
}
)

// ------------------------------------------------------------
// choose a decoder

// stereophonic / binaural
~decoder = FoaDecoderMatrix.newStereo((131/2).degrad, 0.5) // Cardioids at 131 deg
~decoder = FoaDecoderKernel.newUHJ                         // UHJ (kernel)
~decoder = FoaDecoderKernel.newSpherical                   // synthetic binaural (kernel)
~decoder = FoaDecoderKernel.newCIPIC                       // KEMAR binaural (kernel)

// pantophonic (2D)
~decoder = FoaDecoderMatrix.newQuad(k: 'dual')             // psycho optimised quad
~decoder = FoaDecoderMatrix.newQuad(pi/6, 'dual')          // psycho optimised narrow quad
~decoder = FoaDecoderMatrix.new5_0                         // 5.0
~decoder = FoaDecoderMatrix.newPanto(6, k: 'dual')         // psycho optimised hex

// periphonic (3D)
~decoder = FoaDecoderMatrix.newPeri(k: 'dual')             // psycho optimised cube
~decoder = FoaDecoderMatrix.newDiametric(                  // psycho optimised bi-rectangle
    [[30, 0], [-30, 0], [90, 35.3], [-90, 35.3]].degrad,
    'dual'
)

// inspect
~decoder.kind
~checkMyServerOutputs.value(s, ~decoder)

// ------------------------------------------------------------
// define ~renderDecode
(
~renderDecode = { arg in, decoder;
    var kind;
    var fl, bl, br, fr;
    var fc, lo;
    var sl, sr;
    var flu, blu, bru, fru;
    var fld, bld, brd, frd;
    var slu, sru, sld, srd;

    kind = decoder.kind;

    case
        { decoder.numChannels == 2 }
            {
                // decode to stereo (or binaural)
                FoaDecode.ar(in, decoder)
            }
        { kind == 'quad' }
            {
                // decode (to quad)
                #fl, bl, br, fr = FoaDecode.ar(in, decoder);

                // reorder output to match speaker arrangement
                [fl, fr, bl, br]
            }
        { kind == '5.0' }
            {
                // decode (to 5.0)
                #fc, fl, bl, br, fr = FoaDecode.ar(in, decoder);
                lo = Silent.ar;

                // reorder output to match speaker arrangement
                [fl, fr, fc, lo, bl, br]
            }
        { kind == 'panto' }
            {
                // decode (to hex)
                #fl, sl, bl, br, sr, fr = FoaDecode.ar(in, decoder);

                // reorder output to match speaker arrangement
                [fl, fr, sl, sr, bl, br]
            }
        { kind == 'peri' }
            {
                // decode (to cube)
                #flu, blu, bru, fru, fld, bld, brd, frd = FoaDecode.ar(in, decoder);

                // reorder output to match speaker arrangement
                [flu, fru, blu, bru, fld, frd, bld, brd]
            }
        { kind == 'diametric' }
            {
                // decode (to bi-rectangle)
                #fl, fr, slu, sru, br, bl, srd, sld = FoaDecode.ar(in, decoder);

                // reorder output to match speaker arrangement
                [fl, fr, bl, br, slu, sru, sld, srd]
            };
}
)

// ------------------------------------------------------------
// now we're ready to try the examples below!
// ------------------------------------------------------------

Produced via the Ambisonic Toolkit

The following three sound examples are excerpts from recordings produced via the ATK.

If you haven't already chosen a ~decoder and defined ~renderDecode, do so now.

// ------------------------------------------------------------
// B-format examples, produced via the ATK
// B-format soundfile read from disk

// read a whole sound into memory
// remember to free the buffer later!
// (boot the server, if you haven't!)
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Anderson-Pacific_Slope.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Howle-Calling_Tunes.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Pampin-On_Space.wav")

(
{
    var sig; // audio signal

    // display decoder
    "Ambisonic decoding via % decoder".format(~decoder.kind).postln;

    // ------------------------------------------------------------
    // test sig
    sig = PlayBuf.ar(~sndbuf.numChannels, ~sndbuf, BufRateScale.kr(~sndbuf), doneAction:2); // soundfile

    // ------------------------------------------------------------
    // decode (via ~renderDecode)
    ~renderDecode.value(sig, ~decoder)
}.scope;
)

// free buffer
~sndbuf.free
// ------------------------------------------------------------

- Joseph Anderson, "Pacific Slope," Epiphanie Sequence, Sargasso SCD28056

- Tim Howle, "Calling Tunes," 20 Odd Years, FMR FMRCD316-0711

- Juan Pampin, "On Space," Les Percussions de Strasbourg 50th Anniversary Edition, Classics Jazz France 480 6512

Natural soundfields, with imaging transforms

This example illustrates the application of various spatial filtering transforms to natural soundfields, recorded via the Soundfield microphone.

The soundfield is controlled by MouseY, which specifies the transform angle argument (90 deg to 0 deg; top to bottom of display). With the mouse at the bottom of the display, the recorded soundfield is unchanged. At the top, the transform is at its most extreme.

If you haven't already chosen a ~decoder and defined ~renderDecode, do so now.

// ------------------------------------------------------------
// B-format examples, natural soundfield with imaging transform
// B-format soundfile read from disk

// choose transformer
~transformer = 'zoomX'
~transformer = 'pushX'
~transformer = 'directO'

// read a whole sound into memory
// remember to free the buffer later!
// (boot the server, if you haven't!)
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Hodges-Purcell.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Leonard-Orfeo_Trio.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Courville-Dialogue.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Leonard-Chinook.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Leonard-Fireworks.wav")
~sndbuf = Buffer.read(s, Atk.userSoundsDir ++ "/b-format/Anderson-Nearfield.wav")

(
{
    var sig;   // audio signal
    var angle; // angle control

    // display transformer & decoder
    "Ambisonic transforming via % transformer".format(~transformer).postln;
    "Ambisonic decoding via % decoder".format(~decoder.kind).postln;

    // angle ---> top    = 90 deg
    //            bottom = 0 deg
    angle = MouseY.kr(pi/2, 0);

    // ------------------------------------------------------------
    // test sig
    sig = PlayBuf.ar(~sndbuf.numChannels, ~sndbuf, BufRateScale.kr(~sndbuf), doneAction:2); // soundfile

    // ------------------------------------------------------------
    // transform
    sig = FoaTransform.ar(sig, ~transformer, angle);

    // ------------------------------------------------------------
    // decode (via ~renderDecode)
    ~renderDecode.value(sig, ~decoder)
}.scope;
)

// free buffer
~sndbuf.free
// ------------------------------------------------------------

- P. Hodges, "Purcell - Passacaglia (King Arthur)," Sound of Space: ambisonic surround sound. [Online]. Available: http://soundofspace.com/ambisonic_files/52 [Accessed: 03-Nov-2011].

- J. Leonard, "The Orfeo Trio & TetraMic," Sound of Space: ambisonic surround sound. [Online]. Available: http://soundofspace.com/ambisonic_files/41 [Accessed: 03-Nov-2011].

- D. Courville, "Comparative Surround Recording," Ambisonic Studio | Comparative Surround Recording, 2007. [Online]. Available: http://www.radio.uqam.ca/ambisonic/comparative_recording.html [Accessed: 26-Jul-2011].

- J. Leonard, "A couple of Chinook helicopters," Sound of Space: ambisonic surround sound, 20-Mar-2008. [Online]. Available: http://soundofspace.com/ambisonic_files/47 [Accessed: 03-Nov-2011].

- J. Leonard, "Fireworks," Sound of Space: ambisonic surround sound, 25-Aug-2009. [Online]. Available: http://soundofspace.com/ambisonic_files/37 [Accessed: 03-Nov-2011].

- Joseph Anderson, "Nearfield source," [unpublished recording]

sc version: 3.9dev